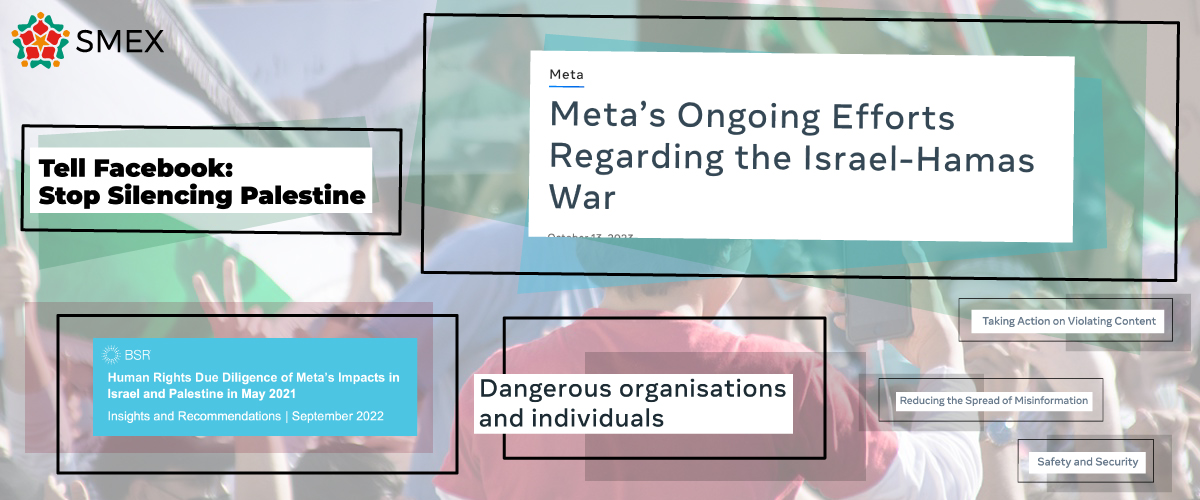

On October 13, Meta released a statement outlining a series of measures introduced by the company to moderate harmful content on its platforms during Israel’s ongoing war on Gaza, following the October 7 attacks by Hamas.

Meta’s statement was published in a moment of crisis, in the midst of a genocide against Palestinians in Gaza. The company had ample time, in periods of relative “peace,” to adjust these policies by implementing the Business for Social Responsibility (BSR) recommendations on the May 2021 crisis in Israel and Palestine, yet it failed to do so. BSR, a business network and consultancy, published these recommendations in the course of a due diligence exercise focused on Meta’s responsibility under the United Nations Guiding Principles on Business and Human Rights (UNGPs) in relation to the May 2021 Sheikh Jarrah evacuation.

Meta’s recent statement opens with a faulty framing of the situation that erases the context of the October 7 attacks, reducing the events to an act of “shocking” and “horrifying” terrorism and replicating western media’s inadequate and unethical reporting on the events in Israel and Palestine. Meta’s choice of language makes its bias against Palestinians explicit: it refers to the October 7 events as “the brutal terrorist attacks by Hamas on Israel,” while using the much milder and more nuanced language of “Israel’s response in Gaza.”

In September 2022, the BSR report set out a series of specific recommendations to Meta, in line with the UNGPs. They address the company’s failures in 2021, ranging from the implementation of its Dangerous Organizations and Individuals (DOI) policy to the linguistic demands of a volatile situation. BSR published its recommendations for Meta a year ago, after the Sheikh Jarrah incidents in 2021, when many organizations called on Meta to conduct an independent audit of its content moderation.

However, the company has yet to put them to good use. Had the findings of the report, which Meta itself had commissioned, been taken into account over the past twelve months, digital rights violations against Palestinian voices would not be taking place during this round of Israeli aggression in Gaza and the West Bank.

A recent leak reported by the Wall Street Journal (WSJ) also indicates that the recommendations have not been implemented.

Here is what needs more clarification and reworking in Meta’s newly released measures.

- Meta claims to have established a special center with a team of “fluent” Arabic and Hebrew speakers to quickly fact-check content on its platforms. This is no guarantee of their ability to understand content and context, as “fluent” does not necessarily mean “native.” Nor do we know whether these moderators clearly understand the Levantine dialect. Given the complexity of Arabic and Hebrew and their many dialects, it is vital that human moderators fully grasp the local cultural nuances of the language they are moderating. Lack of personnel cannot be an excuse for a multibillion-dollar company. According to the WSJ, Meta told its Oversight Board in September that the goal of “having functioning Hebrew classifiers” was “complete.” Meta later contradicted that statement, saying it did not have enough data for the system to function adequately, but that it has now deployed it against hateful comments.

- Similarly, there is no proof or additional information that this team is in fact unbiased. Meta has never disclosed any information about the hiring process for moderators. Such a disclosure would provide us with insight into the level of bias among the moderators. Even more so now, amid the atrocities taking place, Meta needs to be fully transparent about the training that moderators went through in order to achieve optimal impartiality. Situations like the present one, involving a volatile area where conflicts erupt constantly, are in dire need of a specific and systematic approach. This means employing moderators who have in-depth knowledge of the historical and political context of the region in question.

- Human moderators are expected to act on Meta’s existing policies and therefore have limited room for maneuver. Meta reminds us that Hamas is designated by the US government as both a Foreign Terrorist Organization and a Specially Designated Global Terrorist. Therefore, it is also designated under Meta’s Dangerous Organizations and Individuals (DOI) policy. This is one of the first times Meta has publicly acknowledged that an organization is on its DOI list, a list we know almost nothing about. The only publicly available information about this list and its content is a leaked version published by The Intercept in 2021.

- Because it follows US geopolitical standards, Meta’s DOI list consists mainly of entities from the MENA region (70% of the entities in the first tier are Arabic-speaking), arguably due to connections with US-designated terrorism. This is already a biased decision. The 2022 BSR report reiterates that during the May 2021 crisis, Meta over-moderated pro-Palestinian content while under-moderating pro-Israeli content, erroneously removing the former and leaving up the latter. Additionally, the report revealed discrepancies in the company’s ability to process content in Arabic and in Hebrew. Meta’s automated systems tended to proactively detect more potentially violating content in Arabic than in Hebrew, thus widening the gap in how the two sides are treated.

- The same transparency guarantees should also apply to fact-checkers. In its statement about current efforts, Meta mentions, in very broad terms, the use of third-party fact-checkers in the region, again failing to give a clear picture of the profile and background of these fact-checkers or of their day-to-day tasks.

- The company’s mention of a “special operations center staffed with experts” includes no further details about the experts’ actual areas of expertise or their exact number. Such vague phrases cannot in themselves serve as guarantees in these extraordinary circumstances. The current situation calls for an elaborate strategy to deal with instances of fake news, censorship, misinformation, and shadow-banning. Meta must be fully transparent about the methods used to ensure the impartiality of its moderators and their thorough understanding of the political and historical dimensions of the crisis. This entails Meta revealing the exact number of these experts, their areas of expertise, and substantial proof that they possess the necessary tools to make sound moderation decisions that do not exclude the reportedly under-represented voices of Palestinians on its platforms.

- One way to achieve this is by employing local moderators who come from the region and are familiar with not only the linguistic nuances but also the historical, political, and cultural implications of words and phrases in both Arabic and Hebrew. It is crucial that civil society pressures Meta for more transparency about all aspects of the operation of its human moderators that could impact decision-making, such as the exact number of working hours, number of posts reviewed, etc.

- Given Israel’s several attempts to manipulate public opinion through paid ads and fake news, such as its debunked claims of 40 beheaded children in Israel and its denial of bombing Al Ahli Arab Hospital in Gaza, Meta must offer absolute transparency about any requests it receives from third parties, including state and non-state actors. Sharing such requests with the public and its users can help shed light on the broader context and contribute to users’ better understanding of how platforms deal with these situations.

- While emphasizing its desire to “give everyone a voice while keeping people safe” on its apps and that it is not its “intention to suppress a particular community or point of view,” Meta claims that due to higher volumes of content being reported, content that doesn’t violate platform policies may be removed in error. Ironically, this goes hand in hand with the removal of Palestinian content and hashtags due to a “surprise bug” on Meta’s platforms, which happened to malfunction during one of the worst Israeli attacks on Palestinians in Gaza in the past 16 years. According to the WSJ leaks, Meta usually hides comments as “hostile speech” when its systems are 80% certain that they qualify. Its “temporary risk response measures” included lowering that threshold to just 25% certainty for Palestinian users. This further exacerbates Meta’s bias against Palestinians.

Meta’s silencing of Palestinians online is part of a larger campaign unfolding offline. Censorship, misinformation, and online safety all have real-world consequences.

Meta urgently needs to assume responsibility and fulfill its proclaimed promise to all its users: a safe space for everyone to express their views, free of censorship and of its more insidious forms, such as throttling and “glitches.”