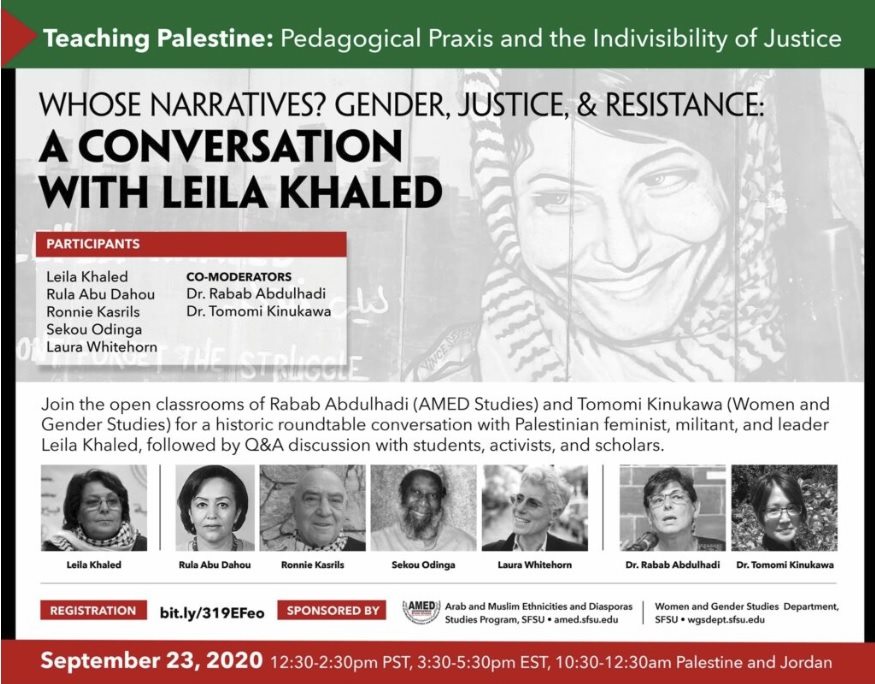

On September 23, Zoom cancelled a virtual event featuring Leila Khaled, a veteran member of the Popular Front for the Liberation of Palestine (PFLP). The virtual event was organized by the Arab and Muslim Ethnicity and Diasporas Studies (AMED Studies) program at San Francisco State University (SFSU). After the event was announced on Facebook, certain Jewish and Zionist groups launched campaigns and protests against the panel because of Khaled's membership in the PFLP, which the United States designated as a terrorist group in 1997, and her involvement in two hijackings in 1969 and 1970. In response to the pressure, the American videoconferencing platform prohibited AMED Studies from hosting the webinar on its platform, claiming the event violated the company's policy.

The event organizers at SFSU confirmed the cancellation by Zoom, stating: "Zoom has threatened to cancel this webinar and silence Palestinian narratives." After Zoom cancelled the event on its platform and Facebook removed the event from AMED's page, the studies center hosted and streamed part of its session with Khaled on YouTube, before YouTube also shut down the livestream.

Zoom explained its reasoning in response to the Lawfare Project, an American pro-Israel organization that had written a letter demanding that Zoom remove the event. Zoom's response reads: "In light of the speaker's reported affiliation or membership in a U.S. designated foreign terrorist organization, and SFSU's inability to confirm otherwise, we determined the meeting is in violation of Zoom's Terms of Service (ToS) and told SFSU they may not use Zoom for this particular event." Zoom's ToS prohibit using its services "in violation of any Zoom policy or in a manner that violates applicable law, including but not limited to anti-spam, export control, privacy, and anti-terrorism laws and regulations and laws requiring the consent of subjects of audio and video recordings."

AMED’s Facebook event cover.

Terms of Service vs. The Law

Zoom's decision drew criticism from human rights activists and journalists. Jillian York, Director for International Freedom of Expression at the Electronic Frontier Foundation (EFF), told SMEX that the decision reflects Zoom's ToS more than the law, because American companies are not legally required to remove content from US-designated terrorist groups. "It is the company's First Amendment right to 'curate' content however they want. A number of prominent US companies ban any group that the US government (the State Department in particular) deems a foreign terrorist organization." York added that "in some cases they are obliged to remove groups or individuals designated by the Treasury Department."

When it comes to Khaled’s case, York wondered “why these companies fall so easily in line with who the US calls a terrorist, particularly at a time when the US regime is considering labeling Antifa (Anti-fascist movement) and certain environmentalist groups as such.” York explained that it is ultimately a corporate decision because “US companies have the legal right to moderate content as they see fit – not only ‘terrorist’ content, but really, anything.”

Zoom's user base has grown dramatically during the COVID-19 pandemic, but the company has previously come under fire for its content moderation decisions. Marwa Fatafta, the Middle East and North Africa (MENA) policy manager at Access Now, agrees with York and told SMEX that the decision is a matter of the company's ToS. "This is not the first event that Zoom canceled," she said. In June, Zoom shut down the accounts of three US-based Hong Kong human rights activists after pressure from the Chinese government, claiming it was complying with local laws. "The question is, does Zoom have to be involved in content moderation? Should they manage the content in their sessions?" Fatafta asked.

Content moderation bias

Technology companies seem to prey on vulnerable communities, especially in the Arabic-speaking region. Following the news of Zoom's ban on Khaled's webinar, Fatafta asked in a tweet why "US companies cancel political speech and debate in/on MENA region while allowing white supremacists to run free on their platforms."

Facebook and YouTube also refused to host AMED's event featuring Khaled. Facebook's decision, in particular, follows a pattern of censoring Palestinian content. On September 23, 7amleh, a Palestinian digital rights organization, and other partners launched a campaign calling on Facebook and its Oversight Board to stop supporting Israel's efforts to censor Palestinian voices on the platform, and to remove Emi Palmor, former director general of Israel's Ministry of Justice, from the Oversight Board. Specifically, 7amleh's campaign demands that members of Facebook's Oversight Board have "a record of upholding human rights, not of censoring oppressed communities like Palestinians living under brutal military occupation and apartheid."

7amleh has documented Facebook's biased moderation of Palestinian content since 2018. In 2019, Facebook complied with 79% of the Israeli government's requests to remove user data, although the company did not disclose its reasons. According to Fatafta, technology companies constantly face pressure to remove user-generated content from the region, and they comply. "The moderated content is usually of vulnerable communities from the region because the companies' losses of removing the content of a vulnerable community are always less than facing a powerful player."

This repression of marginalized voices by tech companies further restricts the "free" communication channels available to citizens in the region, where governments already infringe on civic space, both online and offline. "People in the region find the Internet and these platforms as a space to discuss political issues, organize events and protests, express their anger, among other issues," explained Fatafta. "Now these platforms come to suppress their voices by content moderation."

We call on Zoom to be more transparent about its content moderation

Zoom should develop a clear content moderation policy instead of making ad hoc decisions and hiding behind vague clauses in its ToS. If Zoom is going to enter the moderation arena, it also needs to develop detailed standards, ideally with independent review by outside experts, and apply them evenly, not only when it faces pressure from interest groups. Zoom's commitment to release a transparency report is a first step, but it can only be effective if accompanied by more detailed content moderation policies.

Moreover, Zoom and other major tech companies should stop applying a double standard to Palestinian content. 7amleh's research has documented this double standard on platforms ranging from Facebook to Google Maps. Too often, we have seen content from, or involving, Palestinians removed from these platforms with little sound reasoning, and unfortunately, Zoom has chosen to follow this trend.