In April 2020, SMEX launched the Digital Safety Helpdesk to support internet users, activists, journalists, bloggers, and human rights organizations who have been affected by cybersecurity incidents in Arabic-speaking countries. The Helpdesk team tackles and tracks a wide range of cases, from lost devices or a malfunctioning website to online censorship, harassment, and doxxing. When it comes to content takedown on social media platforms, incidents are collected and analyzed to propose better policies to protect users’ freedom of expression online.

Since July 2020, SMEX’s helpdesk and its internal incident-handling system have filtered cases according to urgency and capacity from 9:00 AM to 12:00 AM, seven days a week. Those affected by digital rights abuses can reach the SMEX Digital Safety Helpdesk through WhatsApp or Signal (+961 81 633 133) and through email (helpdesk@smex.org). Visit the Digital Safety Helpdesk page (smex.org/helpdesk) for further information.

Cybersecurity Incidents

The digital landscape is becoming increasingly governed by each platform’s ‘terms of service,’ also known as ‘Community Standards,’ that regulate online content. Initially, this was meant to ensure a safe and open online space where millions of users could share and access information with the least harm possible. Nevertheless, these regulations are mainly devised to accommodate specific contexts without an adequate understanding of larger political, social and cultural nuances beyond their country of origin. At the same time, these standards are imposed via automated algorithms that are often prone to errors. Records verify a surging wave of online censorship of critical voices from our region as a result of “moderation mistakes.” This is where the Helpdesk can offer the most help. After cases are resolved, our team compiles the recorded cases and analyzes them to push for fair, context-specific policies that could reduce the recurrence of such incidents.

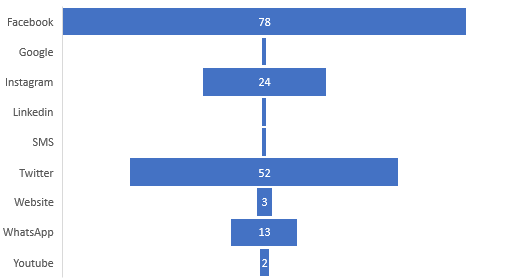

Graph (1): Number of tickets concerning different platforms between Jan 2021 and Jun 2021 depicted with Zammad.

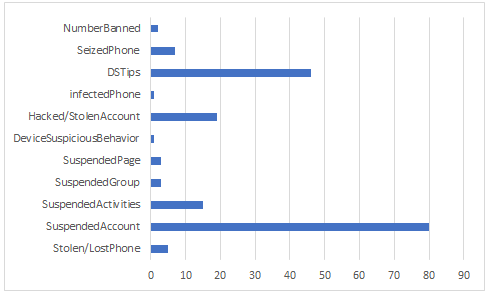

Graph (2): Number of Tickets categorized by ticket type

To keep track of the different incidents, the SMEX Digital Safety Helpdesk uses Zammad, an open-source user-support ticketing solution that allows the team to visualize the collected data, such as the type and frequency of digital threats or violations and the platforms on which they occur.

Graph (1) shows the number of cases per platform, while graph (2) tracks the different categories these cases fall under, such as cyberbullying, hate speech, online harassment and suppression of freedom of expression. As graph (2) shows, incidents related to suspended social media accounts or activities are the most common.

Notably, the platform where most incidents occur is Facebook, as it is a popular social channel in Arabic-speaking countries, followed by Twitter and Instagram. Facebook is commonly used in Arab countries to share and express political opinions, whereas Instagram, for example, is usually used for more social or personal sharing. That said, Twitter is used as widely as Facebook, although digital security incidents are less frequent on Twitter.

Facebook has only recently started taking into consideration the layered and complex realities of its users in Arabic-speaking countries. Its content moderation approach still needs extensive research on the specificities of each country to better evaluate what it deems in line with its community standards and what it considers to be harmful content. In addition, its constantly changing community standards are tailored to American laws and perspectives, making it difficult for people in our region to express themselves freely without their content being flagged as inappropriate.

Collecting cases to push for policy changes

The SMEX Digital Safety Helpdesk mainly supports people and activists online, while informing the public about digital rights violations in the region. The cybersecurity incidents reported to the Helpdesk team are studied and compared to ultimately influence tech companies and push for transparent data privacy and management policies. Cases that pose an immediate physical threat to the user, such as doxxing, death threats, hate speech that incites violence, and physical arrest or detention, are handled with the highest priority.

Lucie Doumanian, Operations Manager at Social Media Exchange (SMEX), states that the Digital Safety Helpdesk’s demands for transparency, data protection, and freedom of speech online should ideally reach not only tech companies, but also national governments and regional organizations: “There should be policy advocacy on each of the three levels. The question is how to influence and push for policy changes on a national level if the state is not functioning properly. How can we advocate for digital rights when lobbying for them is not a realistic option under an unstable government?” In most Arabic-speaking countries, digital security and privacy are not among the governments’ top priorities. Often, cybersecurity incidents are even perpetrated by the state, whether through online surveillance or prosecution of activists who use social media platforms to express dissent.

Nonetheless, our team continues to push for fair data policies on both the national and regional levels. The data collected by the Helpdesk becomes tangible evidence during pressure campaigns intended to advocate for greater transparency from both tech companies and local government-sponsored platforms. For instance, during the latest Israeli aggression against the Palestinian residents of the Sheikh Jarrah neighbourhood in May 2021, the online sphere witnessed unprecedented solidarity with Palestinians, with millions denouncing Israel’s policies of ethnic cleansing, apartheid and human rights violations on their social media accounts. As the Palestinian narrative was gaining momentum online, these users had their content removed and their accounts blocked, especially on Instagram and Twitter, in a blatant attempt to keep Israel’s atrocities from being exposed to the public. The Helpdesk played a crucial role in documenting suspended accounts as SMEX, along with other regional and international digital rights organizations, entered into heated negotiations with these companies to confront them about these inhumane practices. We used the collected data to expose the flaws in their moderation policies, which proved to be biased and to lack an understanding of the Palestinian context.

Digital safety for activists and journalists in Arabic-speaking countries

The Digital Safety Helpdesk at SMEX is a bridge between Arabic-speaking societies and technology companies abroad. “Our priority has always been the civil space,” says Doumanian. Over the past two years, we have helped many protesters protect the data on their digital devices while offering journalists and activists advice on how to enhance their digital safety and protection. At the same time, the Helpdesk team is in constant communication with tech companies regarding their moderation policies and content takedowns in the region. Samar Al-Halal, Technology Unit Lead at SMEX, who also handles the Helpdesk cases, explains the process as follows:

“When activists’ accounts are blocked, for example, we take the case directly to the company, such as Facebook or Twitter. We follow this procedure only when the person is included among our targets: journalists, bloggers and activists.”

Although most of the cases are from activists, journalists or internet users based in Lebanon, the Helpdesk team also handles cases from Egypt, Tunisia, Syria, Kuwait and the entire Arabic-speaking region.

How does SMEX’s Helpdesk work?

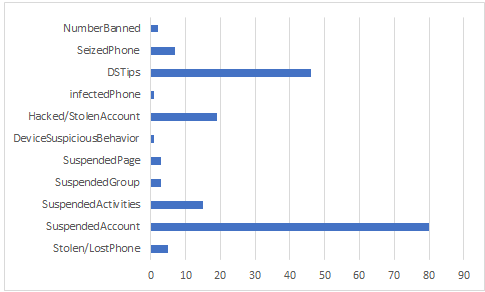

(Graph A) Number of Tickets categorized by Technical ticket type

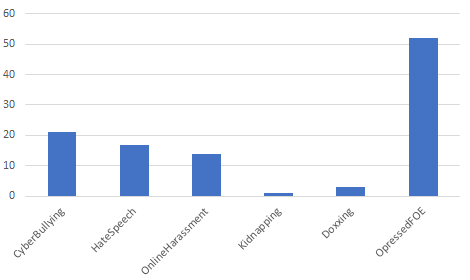

(Graph B) Number of tickets categorized under social violations

When the Helpdesk receives a ticket on Zammad, our incident handlers categorize it using the following taxonomy:

1) Specify the platform on which the issue occurs

2) Divide the incoming tickets by ticket type

After investigation, the Helpdesk Coordinator identifies the ticket’s type, whether it falls under Technical issues or Social Violations.

Based on the investigations and categorization, the Helpdesk Coordinator decides whether to escalate the case to the concerned tech company or not. Cases are escalated only if the user is among our target audience, which is one of the following:

Bloggers

Journalists

Human Rights Defenders

Civil Society Groups

Alternative Media

Political Collectives

Activists

Exceptions apply if the coordinator assesses the situation as “Real World Harm.”
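The escalation rule described above can be summarized in a few lines of code. The following is only a minimal sketch, not the Helpdesk's actual tooling and not part of Zammad's API: the names `Ticket`, `TicketType`, `TARGET_AUDIENCE` and `should_escalate` are hypothetical and introduced purely for illustration. It assumes that the coordinator has already recorded the platform, the ticket type, the requester's profile and whether the case involves real-world harm.

```python
from dataclasses import dataclass
from enum import Enum


class TicketType(Enum):
    """The two top-level ticket types named in the article."""
    TECHNICAL = "Technical Issues"
    SOCIAL_VIOLATION = "Social Violations"


# Target audience as listed in the article (lower-cased for matching).
TARGET_AUDIENCE = frozenset({
    "blogger",
    "journalist",
    "human rights defender",
    "civil society group",
    "alternative media",
    "political collective",
    "activist",
})


@dataclass
class Ticket:
    """Hypothetical ticket record; field names are assumptions."""
    platform: str                  # step 1: platform where the issue occurs
    ticket_type: TicketType        # step 2: Technical or Social Violation
    requester_profile: str         # e.g. "journalist", "activist"
    real_world_harm: bool = False  # coordinator's assessment


def should_escalate(ticket: Ticket) -> bool:
    """Escalate to the tech company if the requester belongs to the
    target audience, or if the case is assessed as real-world harm."""
    in_target = ticket.requester_profile.strip().lower() in TARGET_AUDIENCE
    return in_target or ticket.real_world_harm


if __name__ == "__main__":
    ticket = Ticket("Facebook", TicketType.SOCIAL_VIOLATION, "Journalist")
    print(should_escalate(ticket))
```

In practice the decision rests on the coordinator's investigation and judgment; the sketch only captures the stated rule that membership in the target audience or a “Real World Harm” assessment leads to escalation.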

Graph A shows the tickets that fall under the Technical issue category, where many users contact the Helpdesk for digital security tips: “How to strengthen my password?” or “How to activate 2FA on Instagram?” Others may contact the Helpdesk to ask our Forensic Team for assistance in determining whether their devices have been hacked and in ensuring that no malware has been installed, whether by mistake or intentionally to spy on them.

Where do we go from here?

For the current globalized digital world and for the new digital opportunities and challenges that are yet to come, our region is in urgent need of a coordinated network of digital watchdogs that protect freedom of speech and online expression.

We call on Facebook and Twitter to reach out to civil society organizations when developing their policies so that they are aware of the complexities of our region and can create policies that do not criminalize content that, to us, is part of our cultural nuance. For example, a large number of suspensions are based on the use of certain context-specific words in the Arabic language, such as the word “شهيد” meaning “martyr.”

In light of such haphazard censorship by the different tech companies, many crucial questions demand answers: Is all this suspension part of a larger plan to silence voices in the region, a region where clear political interests are invested in growing online dictatorships? And if this is our reality, then where do content moderation and the sacred freedom of expression meet, if they meet at all in our universe?